DevOps

We build solutions that deploy with ease and scale to handle high transaction volumes, using DevOps practices such as Docker containerization and Kubernetes-based orchestration and scaling. The result is robust, scalable, and highly available applications.

Our expertise covers both native Kubernetes implementations and Rancher-based implementations. Our solutions help organizations achieve application scalability, continuous integration, ease of deployment, and version control of all artifacts.

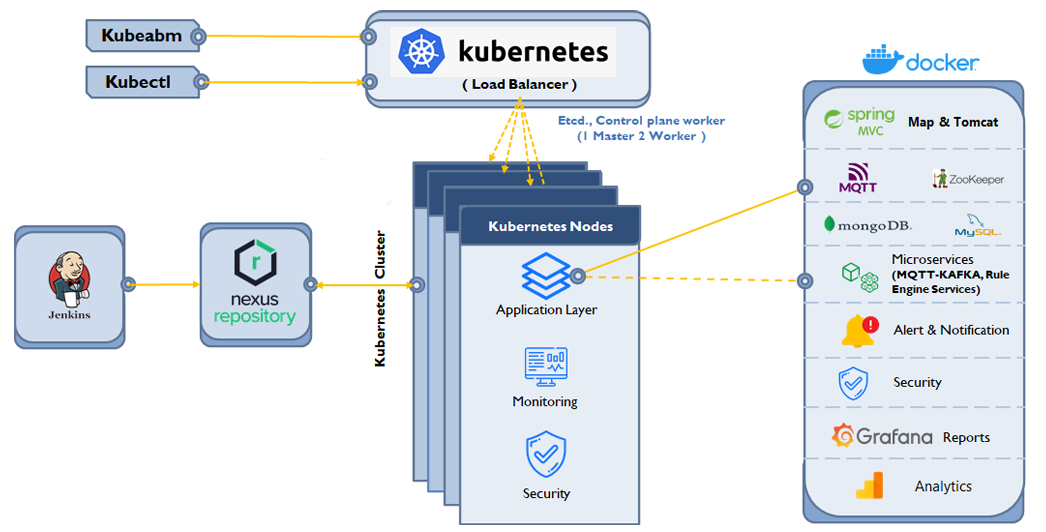

Case Study 1 - IoT Platform - Native Implementation

This native implementation was done for a cloud-based IoT Platform. The platform is a centralized system that provides IoT device integration, group management, data visualization, machine hosting, OTA (Over-the-Air) updates, and microservices orchestration.

The IoT Platform components are deployed as Docker containers, which are managed and orchestrated by upstream Kubernetes. The IoT Platform is configured to run on 1 master node and 2 worker nodes.

Each component (microservices, Kafka, MQTT...) is containerized by creating a Docker image of that component. A continuous integration tool such as Jenkins builds the Docker images and uploads them to the Nexus Repository. The same image is then pulled from the Nexus Repository to create the Docker container on the worker nodes.
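The build, upload, and pull flow described above can be sketched as the Docker CLI commands that a CI job and a worker node might run. This is only an illustration: the registry address `nexus.example.com:8083`, the component name, and the version tag are hypothetical placeholders, since the actual Jenkins job and Nexus configuration are not given here.

```python
from typing import List

# Hypothetical registry address; the real Nexus host and port are not stated.
NEXUS_REGISTRY = "nexus.example.com:8083"


def image_ref(component: str, version: str, registry: str = NEXUS_REGISTRY) -> str:
    """Return the fully qualified image reference for one component."""
    return f"{registry}/{component}:{version}"


def ci_commands(component: str, version: str, context_dir: str = ".") -> List[List[str]]:
    """Commands a CI job could run to build the image and upload it."""
    ref = image_ref(component, version)
    return [
        ["docker", "build", "-t", ref, context_dir],
        ["docker", "push", ref],
    ]


def node_command(component: str, version: str) -> List[str]:
    """Command that fetches the same image on a worker node."""
    return ["docker", "pull", image_ref(component, version)]


if __name__ == "__main__":
    # Print the commands instead of executing them, so the sketch has no side effects.
    for cmd in ci_commands("kafka", "1.0.0"):
        print(" ".join(cmd))
    print(" ".join(node_command("kafka", "1.0.0")))
```

Because the build step and the pull step derive the reference from the same function, the image that runs on a node is the one the pipeline published.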

For high availability and scalability, Kubernetes orchestrates the containers that make up these components. Containers in Kubernetes are scheduled as pods. The pods on the worker nodes are managed and controlled from the master node using YAML configurations. Because the setup is captured in YAML, the IoT Platform can be replicated in any environment. Heavy modules (Kafka, MongoDB, MySQL) are segregated into different pods, while lightweight modules (3 microservices) are configured to single pods.
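As a rough illustration of this pod layout, the sketch below generates Kubernetes Deployment manifests as JSON, which `kubectl apply -f` accepts alongside YAML. It rests on several assumptions that are not taken from the project: the microservice names, the registry address, and the image tag are hypothetical; the three lightweight microservices are read as sharing one pod; and plain Deployments are used for every module, although a real setup might choose a different workload type for the stateful ones.

```python
import json
from typing import Dict, List

REGISTRY = "nexus.example.com:8083"  # hypothetical registry address
IMAGE_TAG = "1.0.0"                  # hypothetical image version


def deployment(name: str, containers: List[str], replicas: int = 1) -> Dict:
    """Build an apps/v1 Deployment whose pod runs the given containers."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": c, "image": f"{REGISTRY}/{c}:{IMAGE_TAG}"}
                        for c in containers
                    ]
                },
            },
        },
    }


def platform_manifests() -> List[Dict]:
    """One pod per heavy module, one shared pod for the light microservices."""
    heavy = ["kafka", "mongodb", "mysql"]
    light = ["service-a", "service-b", "service-c"]  # hypothetical names
    manifests = [deployment(module, [module]) for module in heavy]
    manifests.append(deployment("microservices", light))
    return manifests


if __name__ == "__main__":
    bundle = {"apiVersion": "v1", "kind": "List", "items": platform_manifests()}
    print(json.dumps(bundle, indent=2))
```

Keeping the layout in one generated file is what makes the platform reproducible: applying the same output to another cluster recreates the same set of pods.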

Kubernetes Deployment Controller: continuously monitors the application instances running in the containers. If the node hosting an instance goes down or is deleted, the Deployment Controller replaces the instance with a new one on another node in the cluster.
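The self-healing behavior described above follows a reconcile pattern: compare the desired number of instances with what is actually running on healthy nodes, then create replacements to close the gap. The sketch below is a simplified model of that idea, not Kubernetes code. The function `reconcile`, the pod and node names, and the "least loaded node" placement rule are all illustrative assumptions.

```python
from typing import Dict, Set


def reconcile(desired: int, pods: Dict[str, str], healthy_nodes: Set[str]) -> Dict[str, str]:
    """Return a pod -> node placement that restores `desired` running pods.

    `pods` maps each pod name to the node it was running on.
    """
    # Pods on failed or deleted nodes are treated as lost.
    running = {pod: node for pod, node in pods.items() if node in healthy_nodes}
    nodes = sorted(healthy_nodes)
    if not nodes:
        return running  # nowhere to place replacements

    serial = 0
    while len(running) < desired:
        # Count pods per healthy node and pick the least loaded one.
        load = {node: 0 for node in nodes}
        for node in running.values():
            load[node] += 1
        target = min(nodes, key=lambda node: load[node])

        # Find a replacement name that has not been used yet.
        while f"pod-r{serial}" in pods or f"pod-r{serial}" in running:
            serial += 1
        running[f"pod-r{serial}"] = target
    return running


if __name__ == "__main__":
    before = {"pod-a": "worker-1", "pod-b": "worker-2"}
    # worker-2 goes down; only worker-1 remains healthy.
    after = reconcile(desired=2, pods=before, healthy_nodes={"worker-1"})
    print(after)
```

In this model, losing `worker-2` drops `pod-b`, and the loop schedules a replacement on the remaining healthy worker so that two instances are running again.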

The Grafana Kubernetes App allows system administrators to monitor Kubernetes cluster performance.

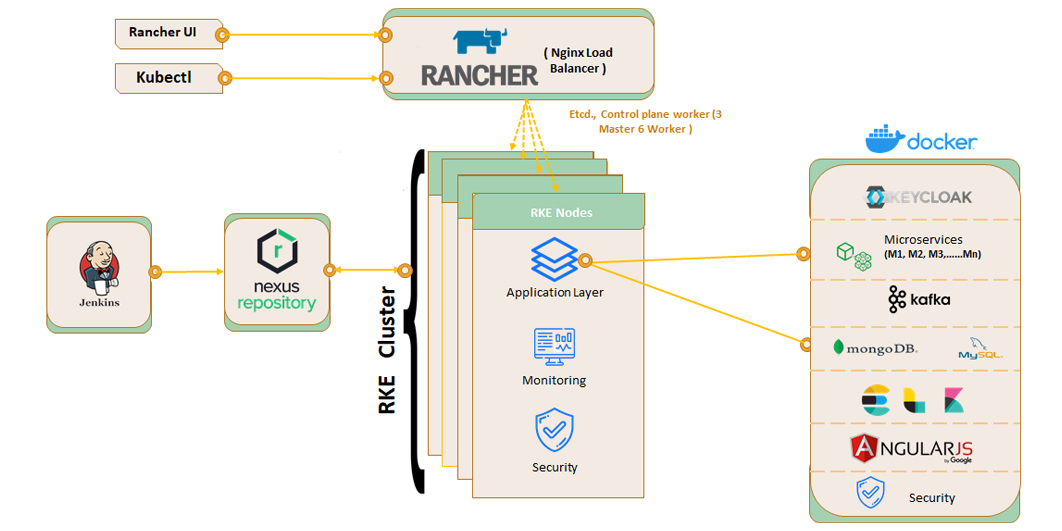

Case Study 2 - European Telecom Customer - Rancher Implementation

This Rancher implementation was done for a European Telecom customer for its 5G product. The implementation uses Rancher to provide a highly available and scalable solution. It also includes a Jenkins pipeline for easy, automated deployments.

Rancher provides integrated tools for running containerized Docker workloads.

The Rancher implementation is configured to run 3 master nodes and 7 worker nodes. The master nodes are also configured to run as worker nodes.

The Rancher cluster is configured to use the RKE custom setup with an Nginx load balancer.

Each component (microservices, GUI, MongoDB…) is containerized by creating a Docker image of that component. A continuous integration tool such as Jenkins builds the Docker images and uploads them to the Nexus Repository. The same image is then pulled from the Nexus Repository to create the Docker container on the worker nodes.

Each component (microservices, GUI, MongoDB…) is deployed on two nodes, with one replica on each node. The GUI is served by an Nginx server running in a pod. The load balancer forwards each request to the respective pods in the cluster, distributing the load between them. The number of replicas of a pod can be scaled up or down based on the load on that pod.
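To make the load distribution and scaling concrete, here is a small sketch of both ideas. The balancing policy and the scaling mechanism used in this project are not stated, so both choices are assumptions: requests are spread round-robin, and the replica count follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, ceil(currentReplicas × currentLoad / targetLoad). The pod names and the replica bounds are hypothetical.

```python
import itertools
import math
from typing import Iterator, List


def round_robin(pods: List[str]) -> Iterator[str]:
    """Yield pod names in rotation so each pod gets an equal share of requests."""
    return itertools.cycle(pods)


def desired_replicas(
    current: int,
    current_load: float,
    target_load: float,
    minimum: int = 1,
    maximum: int = 10,  # hypothetical upper bound
) -> int:
    """Scale the replica count in proportion to load, within the given bounds."""
    wanted = math.ceil(current * current_load / target_load)
    return max(minimum, min(maximum, wanted))


if __name__ == "__main__":
    # Two replicas of the GUI pod, one per node.
    balancer = round_robin(["gui-node1", "gui-node2"])
    for request_id in range(4):
        print(request_id, next(balancer))

    # Load at 90% against a 60% target: scale up from 2 replicas.
    print(desired_replicas(current=2, current_load=90.0, target_load=60.0))
    # Load at 20% against a 60% target: scale down from 2 replicas.
    print(desired_replicas(current=2, current_load=20.0, target_load=60.0))
```

With two replicas running at 90% load against a 60% target, the rule asks for three replicas; at 20% load it drops to one, bounded below by `minimum`.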

The Rancher UI allows system administrators to monitor the Rancher cluster's performance.